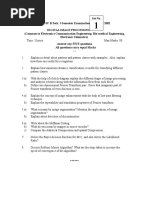

eCognition Software Manual

Trimble® eCognition® Essentials software allows users at any skill level to quickly produce high-quality, GIS-ready deliverables from imagery. These tutorials will help you understand the principles and practices of object-based image analysis using the eCognition software package.

Object-based nearest neighbor classification (NN classification) is a super-powered supervised classification technique. Its advantage is that it combines intelligent image objects from multiresolution segmentation with supervised classification.

What is multiresolution segmentation (MRS)? It groups adjacent pixels into homogeneous image objects whose shapes follow the features in the scene:
• MRS produces thin and long objects for roads.
• MRS creates square objects for buildings.
• MRS generates objects of varying scale for trees and grass.

Figure: Multiresolution segmentation objects. You can't get this using a pixel-by-pixel approach.

Nearest neighbor classification allows you to select samples for each land cover class.

You define the criteria (statistics) for classification, and the software classifies the remainder of the image. In short:

Nearest Neighbor Classification = Multiresolution Segmentation + Supervised Classification

Let's get into the details of a nearest neighbor classification example.

Nearest neighbor classification example

This example uses the following bands: red, green, blue, LiDAR canopy height model (chm) and LiDAR light intensity (int).

1 Perform multiresolution segmentation

Figure: Pixel-based land cover classification

Humans naturally aggregate spatial information into groups. When you see a salt-and-pepper effect in a land cover map, it's probably because a pixel-based classification was used. This problem is why multiresolution segmentation has emerged for classifying high spatial resolution data sets. MRS groups pixels into homogeneous image objects, or "smart vectors". After multiresolution segmentation, we can see roads, buildings, grass and trees as smart objects. That is what gives multiresolution segmentation its advantage over classifying pixel by pixel.
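To make the salt-and-pepper point concrete, here is a minimal Python sketch, assuming a segmentation has already produced a grid of segment IDs. The `object_means` helper and the toy `labels` and `green` grids are hypothetical illustrations, not part of eCognition. The sketch averages one band inside each object, so a single noisy pixel becomes part of its object's statistics instead of being classified on its own.

```python
from collections import defaultdict


def object_means(labels, band):
    """Mean pixel value of one band inside each segment.

    labels: 2-D list of segment IDs (e.g. the output of a segmentation)
    band:   2-D list of pixel values with the same shape
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for label_row, value_row in zip(labels, band):
        for seg_id, value in zip(label_row, value_row):
            sums[seg_id] += value
            counts[seg_id] += 1
    return {seg_id: sums[seg_id] / counts[seg_id] for seg_id in sums}


# Toy 3x4 scene: segment 1 is a patch of grass, segment 2 a strip of road.
labels = [
    [1, 1, 2, 2],
    [1, 1, 2, 2],
    [1, 1, 2, 2],
]
green = [
    [120, 130, 60, 62],
    [125, 40, 58, 61],  # 40 is a noisy pixel inside the grass patch
    [128, 122, 59, 60],
]

means = object_means(labels, green)
print({seg_id: round(m, 1) for seg_id, m in means.items()})  # {1: 110.8, 2: 60.0}
```

A pixel-by-pixel classifier sees the value 40 in isolation and may label it differently from its neighbours, which is exactly the salt-and-pepper effect; at the object level it only nudges the grass object's mean.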

Action: In the process tree, add the multiresolution segmentation algorithm. (Right-click the Process Tree window > Append New > select the multiresolution segmentation algorithm)

This example uses the following criteria:
• Scale: 100
• Shape: 0.1
• Compactness: 0.5

Action: Execute the segmentation. (In the Process Tree window > right-click the multiresolution segmentation algorithm > click Execute)

Scale: Controls how large objects can grow by setting the maximum heterogeneity allowed within an object. A higher value creates larger objects.

Shape: A higher shape value places less weight on color (spectral values) during segmentation.
Compactness: A higher compactness value produces more compact objects after segmentation.

The best advice here is trial and error: experiment with scale, shape and compactness until you get suitable image objects. As a rule of thumb, produce image objects at the largest possible scale that still lets you discern between objects.
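The three parameters meet in a single merge test. The Python sketch below follows the fusion criterion usually described for multiresolution segmentation (Baatz and Schäpe): the color term is weighted by 1 - shape, the shape term by shape, and within the shape term, compactness trades compactness against smoothness. Several details are assumptions for illustration only: the `Segment` statistics are supplied by hand rather than computed during region growing, bands are equally weighted by default, and a merge is accepted while the cost stays below scale squared. It evaluates one candidate merge, not the full segmentation.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    """Hypothetical summary statistics for one image object."""
    n: int                 # number of pixels
    perimeter: float       # border length l
    bbox_perimeter: float  # border length b of the object's bounding box
    stds: list             # per-band standard deviation of pixel values


def color_cost(merged, a, b, weights):
    # Increase in size-weighted spectral heterogeneity, summed over bands.
    return sum(
        w * (merged.n * sm - (a.n * sa + b.n * sb))
        for w, sm, sa, sb in zip(weights, merged.stds, a.stds, b.stds)
    )


def shape_cost(merged, a, b, compactness):
    def h_compact(s):
        return s.n * s.perimeter / math.sqrt(s.n)

    def h_smooth(s):
        return s.n * s.perimeter / s.bbox_perimeter

    d_compact = h_compact(merged) - (h_compact(a) + h_compact(b))
    d_smooth = h_smooth(merged) - (h_smooth(a) + h_smooth(b))
    return compactness * d_compact + (1 - compactness) * d_smooth


def fusion_cost(merged, a, b, shape, compactness, weights=None):
    weights = weights or [1.0] * len(merged.stds)
    # Shape gets weight `shape`; color gets the remainder (1 - shape).
    return ((1 - shape) * color_cost(merged, a, b, weights)
            + shape * shape_cost(merged, a, b, compactness))


def should_merge(merged, a, b, scale, shape, compactness, weights=None):
    # Assumption: the merge is accepted while the cost is below scale squared.
    return fusion_cost(merged, a, b, shape, compactness, weights) < scale ** 2


# Two neighbouring 5x10 objects and the 5x20 object they would form if merged.
a = Segment(n=50, perimeter=30.0, bbox_perimeter=30.0, stds=[5.0, 4.0, 6.0])
b = Segment(n=50, perimeter=30.0, bbox_perimeter=30.0, stds=[5.0, 4.0, 6.0])
merged = Segment(n=100, perimeter=50.0, bbox_perimeter=50.0, stds=[5.5, 4.5, 6.5])

print(round(fusion_cost(merged, a, b, shape=0.1, compactness=0.5), 2))  # 138.79
print(should_merge(merged, a, b, scale=100, shape=0.1, compactness=0.5))  # True
print(should_merge(merged, a, b, scale=10, shape=0.1, compactness=0.5))   # False
```

With the example's settings (Scale 100, Shape 0.1, Compactness 0.5) this merge is accepted, while a Scale of 10 rejects it, which matches the rule that a higher scale yields larger objects.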

2 Select training areas

Now, let's “train” the software by assigning classes to objects. These samples will then be used to classify the entire image. But what do we want to classify? What are the land cover classes?

Action: In the Class Hierarchy window, create classes for buildings (red), grass (green), paved surfaces (pink) and treed (brown). (Right-click the Class Hierarchy window > Insert Class > change the class name > click OK)

Action: Let's select samples in the segmented image.

Add the “Samples” toolbar (View > Toolbars). Select a class, then double-click objects to add them as samples for that class.

Figure: Define land cover samples

Once each class is represented by a good number of samples, we can define our statistics. Note that we can always return to this step and add more samples later.

3 Define statistics

We have now selected samples for each land cover class.
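Looking back at step 2, the classes and samples amount to a small data structure: a set of sample object IDs per land cover class. The Python sketch below is purely illustrative (`TrainingSet`, `underrepresented` and the object IDs are hypothetical, not eCognition API), and it assumes as a design choice that an object can be a sample of only one class. It offers a quick way to spot classes that still need samples before you define statistics.

```python
# Hypothetical mirror of the class hierarchy: class name -> display color.
CLASSES = {"buildings": "red", "grass": "green", "paved surfaces": "pink", "treed": "brown"}


class TrainingSet:
    """Sample object IDs collected for each land cover class."""

    def __init__(self, class_names):
        self.samples = {name: set() for name in class_names}

    def add_sample(self, class_name, object_id):
        if class_name not in self.samples:
            raise KeyError(f"unknown class: {class_name}")
        # Design choice of this sketch: an object is a sample of one class only.
        for ids in self.samples.values():
            ids.discard(object_id)
        self.samples[class_name].add(object_id)

    def underrepresented(self, min_samples):
        """Classes with fewer than `min_samples` samples, sorted by name."""
        return sorted(name for name, ids in self.samples.items() if len(ids) < min_samples)


training = TrainingSet(CLASSES)
for object_id in (3, 8, 15):
    training.add_sample("grass", object_id)
training.add_sample("treed", 21)
print(training.underrepresented(min_samples=3))  # ['buildings', 'paved surfaces', 'treed']
```

The threshold of three samples is only an example; how many samples count as "well represented" depends on the scene.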

What statistics are we going to use to classify all the objects in the image? Defining statistics means adding statistics (features) to the Standard NN feature space.

Action: Open the Edit Standard NN window. (Classification > Nearest Neighbor > Edit Standard NN) This example uses the red, green, blue, LiDAR canopy height model (chm) and LiDAR light intensity (int) bands.

Finding the right statistics takes a bit of experimenting. This example uses the statistics below.

4 Classify
• Action: In the class hierarchy, add the “Standard nearest neighbor” expression to each class. (Right-click the class > Edit > right-click [and min] > Insert new expression > Standard nearest neighbor)
• Action: In the process tree, add the “classification” algorithm. (Right-click the process tree > Append New > select the classification algorithm)
• Action: Select all classes as active classes and execute. (In the parameter window, check all classes > right-click the classification algorithm in the process tree > Execute)

The classification algorithm then classifies every object in the image based on the selected samples and the defined statistics.
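The classify step can be approximated outside eCognition with a plain 1-nearest-neighbor rule: each object takes the class of the closest sample in feature space, with every feature scaled by its standard deviation so that features with large numeric ranges do not dominate the distance. This is a minimal Python sketch under assumptions: the feature names (`mean_red`, `mean_chm`, and so on) and all object values are hypothetical, and as we understand it eCognition's Standard NN also reports a fuzzy membership value per class, which this sketch omits in favour of a hard label.

```python
import math
import statistics

# Hypothetical feature space: per-object means of the five bands used here.
FEATURES = ["mean_red", "mean_green", "mean_blue", "mean_chm", "mean_int"]


def feature_spreads(objects, features):
    """Population standard deviation of each feature over all objects."""
    spreads = {}
    for f in features:
        spread = statistics.pstdev([obj[f] for obj in objects.values()])
        spreads[f] = spread or 1.0  # avoid dividing by zero for constant features
    return spreads


def distance(a, b, features, spreads):
    """Euclidean distance after scaling each feature by its spread."""
    return math.sqrt(sum(((a[f] - b[f]) / spreads[f]) ** 2 for f in features))


def classify(objects, samples, features=FEATURES):
    """Give every object the class of its nearest sample object.

    objects: object ID -> {feature name: value}
    samples: object ID -> class name (the training set)
    """
    spreads = feature_spreads(objects, features)
    labels = {}
    for obj_id, feats in objects.items():
        nearest = min(samples, key=lambda s: distance(feats, objects[s], features, spreads))
        labels[obj_id] = samples[nearest]
    return labels


objects = {
    1: {"mean_red": 180, "mean_green": 90, "mean_blue": 80, "mean_chm": 8.0, "mean_int": 40},
    2: {"mean_red": 70, "mean_green": 140, "mean_blue": 60, "mean_chm": 0.2, "mean_int": 120},
    3: {"mean_red": 60, "mean_green": 110, "mean_blue": 50, "mean_chm": 15.0, "mean_int": 90},
    4: {"mean_red": 120, "mean_green": 115, "mean_blue": 110, "mean_chm": 0.1, "mean_int": 200},
    5: {"mean_red": 175, "mean_green": 95, "mean_blue": 85, "mean_chm": 7.5, "mean_int": 45},
    6: {"mean_red": 65, "mean_green": 112, "mean_blue": 52, "mean_chm": 13.0, "mean_int": 85},
}
samples = {1: "buildings", 2: "grass", 3: "treed", 4: "paved surfaces"}

# Objects 5 and 6 are unlabelled; they come out as "buildings" and "treed".
print(classify(objects, samples))
```

Scaling by standard deviation is one reasonable way to make band means and canopy height comparable; adding or removing entries in `FEATURES` plays the role of editing the Standard NN feature space.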